Artificial Confocal Microscopy for Deep Label-Free Imaging; Nat. Photon. 17, pages 250-258

Xi Chen, Mikhail E. Kandel, Shenghua He, Chenfei Hu, Young Jae Lee, Kathryn Sullivan, Gregory Tracy, Hee Jung Chung, Hyun Joon Kong, Mark Anastasio & Gabriel Popescu

Nature Photonics 17, 250–258 (2023)


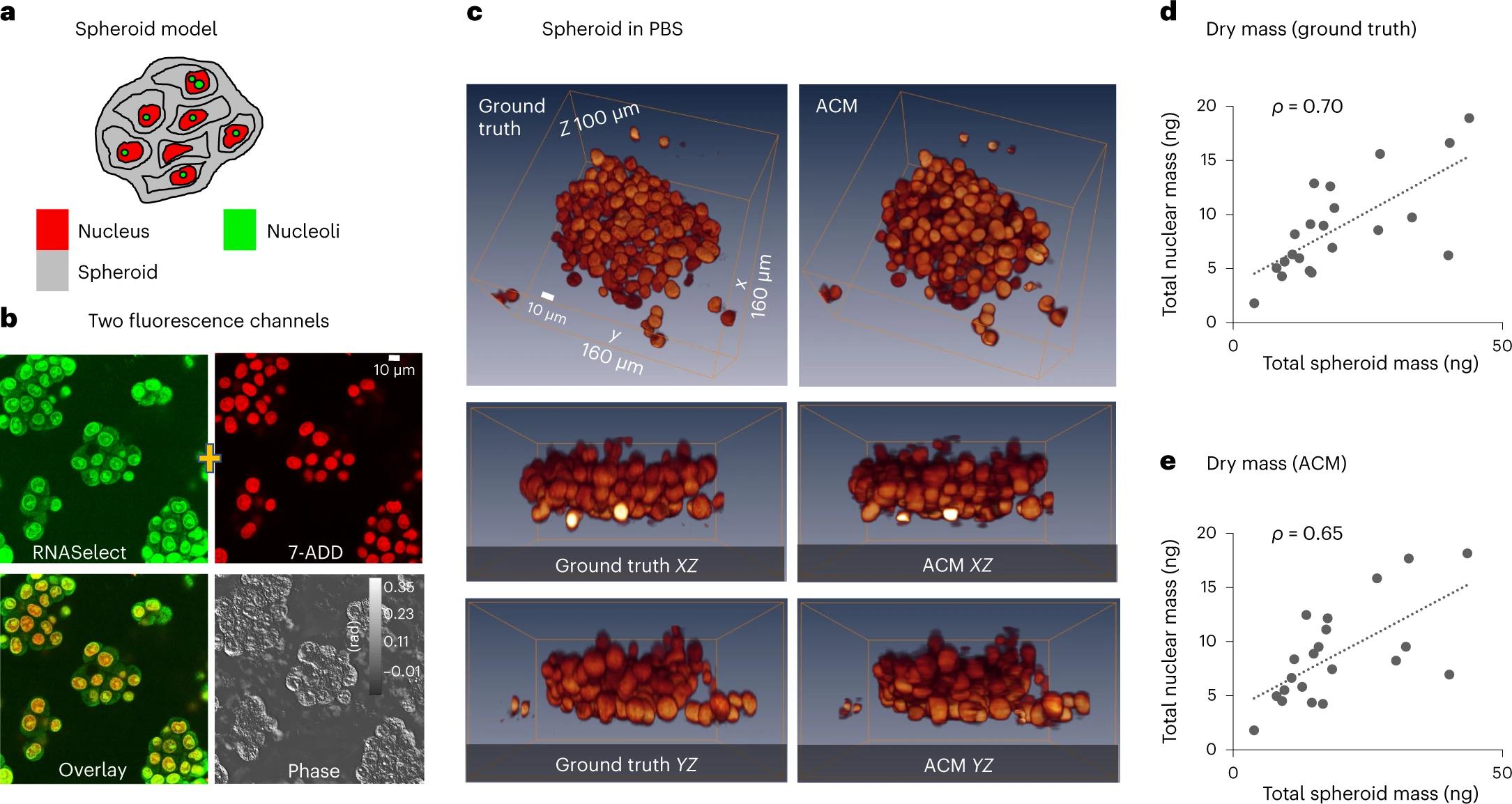

Wide-field microscopy of optically thick specimens typically suffers from reduced contrast due to spatial cross-talk: the signal at each point in the field of view is a superposition of contributions from neighbouring points that are illuminated simultaneously. In 1955, Marvin Minsky proposed confocal microscopy as a solution to this problem. Today, laser scanning confocal fluorescence microscopy is broadly used for its high depth resolution and sensitivity, but this comes at the price of photobleaching, chemical toxicity and phototoxicity. Here we present artificial confocal microscopy (ACM), which achieves confocal-level depth sectioning, sensitivity and chemical specificity non-destructively on unlabelled specimens. We equipped a commercial laser scanning confocal instrument with a quantitative phase imaging module, which provides optical path-length maps of the specimen in the same field of view as the fluorescence channel. Using pairs of phase and fluorescence images, we trained a convolutional neural network to translate the former into the latter. Training the network to infer a new tag is practical because the input and ground-truth data are intrinsically registered and data acquisition is automated. The ACM images show much stronger depth sectioning than the input (phase) images, enabling us to recover confocal-like tomographic volumes of microspheres, hippocampal neurons in culture, and three-dimensional liver cancer spheroids. By training on nucleus-specific tags, ACM can segment individual nuclei within dense spheroids for both cell counting and volume measurements. In summary, ACM can provide quantitative, dynamic data non-destructively from thick samples, while chemical specificity is recovered computationally.
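The abstract does not describe the segmentation pipeline used for cell counting and volume measurement. As a rough illustration only, the sketch below assumes that an ACM-inferred nuclear-stain volume is thresholded and that each 6-connected group of foreground voxels is treated as one nucleus. The function name `count_and_measure_nuclei`, the threshold and the voxel size are hypothetical. Plain connected-component labelling merges nuclei that touch, so dense spheroids would in practice need an additional splitting step (for example, a watershed), which is not shown here.

```python
from collections import deque

import numpy as np


def count_and_measure_nuclei(volume, threshold, voxel_size_um=(1.0, 1.0, 1.0)):
    """Hypothetical sketch: threshold a 3D (z, y, x) nuclear-stain volume and
    label 6-connected foreground objects with a breadth-first flood fill.

    Returns (count, volumes_um3). volumes_um3 lists each object's volume in
    cubic micrometres, in the order objects are first met in a z, y, x scan.
    """
    mask = np.asarray(volume) > threshold
    labels = np.zeros(mask.shape, dtype=np.int32)
    voxel_volume = float(np.prod(voxel_size_um))  # assumed voxel size (dz, dy, dx)
    # 6-connectivity: neighbouring voxels share a face along z, y or x.
    offsets = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    volumes = []
    current = 0
    # np.nonzero yields foreground voxels in row-major (z, y, x) order.
    for start in zip(*np.nonzero(mask)):
        if labels[start]:
            continue  # already assigned to an earlier object
        current += 1
        labels[start] = current
        queue = deque([start])
        n_voxels = 0
        while queue:
            z, y, x = queue.popleft()
            n_voxels += 1
            for dz, dy, dx in offsets:
                nz, ny, nx = z + dz, y + dy, x + dx
                if (0 <= nz < mask.shape[0] and 0 <= ny < mask.shape[1]
                        and 0 <= nx < mask.shape[2]
                        and mask[nz, ny, nx] and not labels[nz, ny, nx]):
                    labels[nz, ny, nx] = current
                    queue.append((nz, ny, nx))
        volumes.append(n_voxels * voxel_volume)
    return current, volumes


# Usage on a synthetic volume with two separated blobs (8 and 6 voxels).
vol = np.zeros((4, 6, 6))
vol[0:2, 0:2, 0:2] = 1.0
vol[1:4, 4:6, 4:5] = 1.0
count, vols = count_and_measure_nuclei(vol, threshold=0.5, voxel_size_um=(2.0, 0.5, 0.5))
print(count, vols)  # 2 [4.0, 3.0]
```

Face connectivity is the most conservative choice: it splits objects that touch only at edges or corners, which slightly reduces accidental merging of neighbouring nuclei but cannot separate nuclei that share a face.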